Configuring ComfyUI AI for distributed use via Tailscale

Project information

- Category: AI

- Project date: 23 February, 2025

Firstly, what is ComfyUI?

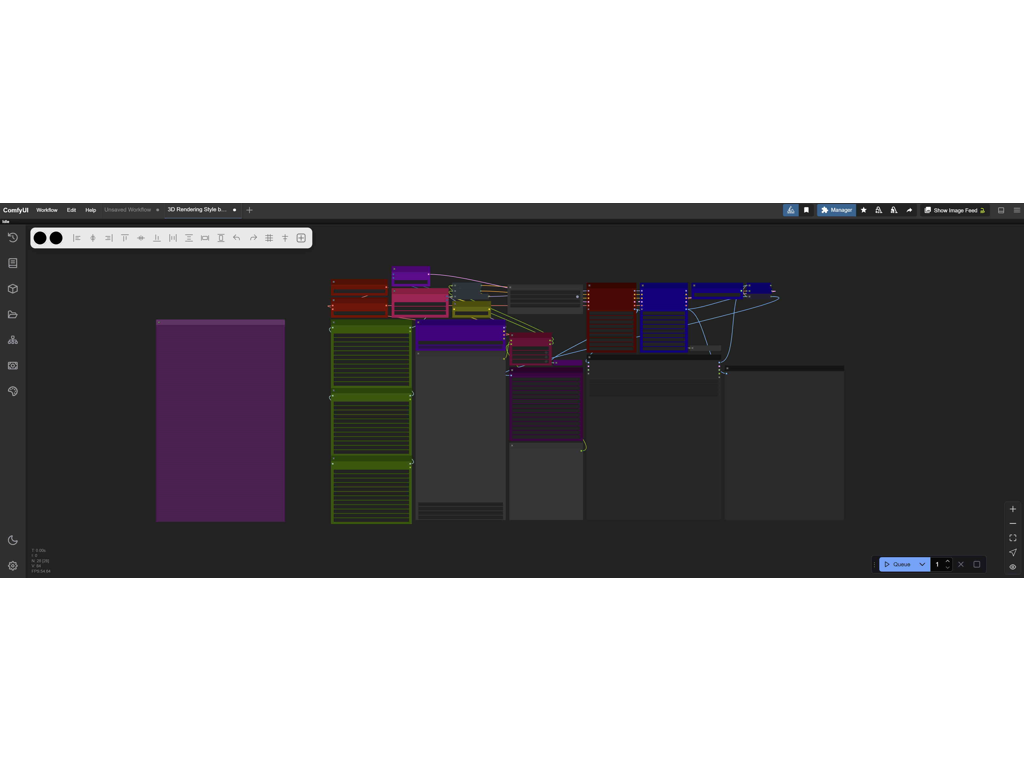

ComfyUI lets you design and execute advanced stable diffusion pipelines using a graph/nodes/flowchart based interface.

What is Tailscale?

Tailscale makes creating software-defined networks easy: securely connecting users, services, and devices.

Why I Built This

As a developer experimenting with generative AI, I hit two hard limits: mobile devices couldn't handle massive SDXL checkpoints (some exceeding 16GB), and cloud services were bleeding my budget dry with capped resolutions, limited batching and $100+/month fees. My wake-up call came when I realized I was paying premium prices for watered-down versions of open-source tools wrapped in pretty UIs.

The solution sat in my home office – a gaming PC with an RTX 3090. Why let that horsepower idle when I could turn it into my personal AI cluster? By combining ComfyUI's workflow flexibility with Tailscale's secure networking, I built a system that lets me:

Security wasn't an afterthought either. The setup uses Google OAuth as its front door (far tougher to crack than API keys) and Tailscale's encrypted mesh networking instead of risky port forwarding. Now I test bleeding-edge models within hours of their GitHub releases rather than waiting months for cloud providers to implement them – all while knowing my training data never leaves my own hardware.

Steps to Reproduce

I'll cover the simplest setup that gets things working and leave more complex options, like Cloudflare tunneling, out of this article.

First, you'll need to grab ComfyUI along with some diffusion models and LoRAs for it. There are plenty of resources online for that, and I definitely recommend checking out Civitai if you're new to Stable Diffusion.

Once your ComfyUI environment is set up and running (generate a few images first just to make sure everything's good to go!), grab Tailscale.

All you need to do with Tailscale to get started is sign in. To make things easy, just log in using Google and you'll automatically use Google's authentication services. Free and easy two-factor authentication right off the bat. There's a ton more you can do in the way of security, but I'll leave that to you to explore. Cybersecurity is a massive rabbit hole!

Once Tailscale is set up, open CMD (Command Prompt) and run the following command:

tailscale up --advertise-exit-node

This will give you a login URL - complete it in your browser.
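To confirm from a script that the machine picked up a Tailscale address after logging in, here's a minimal Python sketch. It assumes the `tailscale` CLI is on your PATH and calls `tailscale ip -4`. The function names are illustrative, and the range check relies on Tailscale handing out IPv4 addresses from the 100.64.0.0/10 block.

```python
import ipaddress
import subprocess
from typing import Optional

# Tailscale assigns IPv4 addresses from the CGNAT block 100.64.0.0/10.
TAILSCALE_V4_RANGE = ipaddress.ip_network("100.64.0.0/10")


def is_tailscale_ipv4(address: str) -> bool:
    """Return True if `address` is an IPv4 address inside the Tailscale range."""
    try:
        ip = ipaddress.ip_address(address.strip())
    except ValueError:
        return False
    return ip.version == 4 and ip in TAILSCALE_V4_RANGE


def get_tailscale_ipv4() -> Optional[str]:
    """Ask the local Tailscale CLI for this machine's IPv4 address.

    Returns None if the CLI is missing, times out, or reports no usable address.
    """
    try:
        result = subprocess.run(
            ["tailscale", "ip", "-4"],
            capture_output=True,
            text=True,
            timeout=10,
        )
    except (FileNotFoundError, subprocess.TimeoutExpired):
        return None
    if result.returncode != 0:
        return None
    lines = result.stdout.strip().splitlines()
    if lines and is_tailscale_ipv4(lines[0]):
        return lines[0].strip()
    return None
```

Calling `get_tailscale_ipv4()` on the PC should return the same address the Tailscale tray icon shows. If it returns `None`, the login probably didn't complete.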

On Mobile/Remote Devices:

Once you're all good there, we just need a new bat file to run ComfyUI.

The new bat is based on ComfyUI's run_nvidia_gpu.bat file. If you're a madman and want to run on CPU, just make sure you add --cpu to the ComfyUI startup command.

@echo off

REM Address and port ComfyUI should listen on (0.0.0.0 = all network interfaces)

set COMFYUI_LISTEN=0.0.0.0

set COMFYUI_PORT=8188

REM Windows firewall rule (creating it needs an elevated prompt)

powershell -Command "New-NetFirewallRule -DisplayName 'ComfyUI' -Direction Inbound -Action Allow -Protocol TCP -LocalPort %COMFYUI_PORT%" 2>nul

REM Start ComfyUI on the address and port set above

.\python_embeded\python.exe -s ComfyUI\main.py --windows-standalone-build --listen %COMFYUI_LISTEN% --port %COMFYUI_PORT%

pause

This is a bit of a loaded upgrade, but it has some key components to it:

Firewall Rule Setup:

powershell -Command "New-NetFirewallRule -DisplayName 'ComfyUI' -Direction Inbound -Action Allow -Protocol TCP -LocalPort %COMFYUI_PORT%" 2>nul

Creates a Windows firewall rule named "ComfyUI" to allow inbound TCP connections on port 8188 (this can be changed to any port you want). The 2>nul suppresses error output (e.g., if the script isn't run as administrator, which creating a firewall rule requires).

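Launch ComfyUI:

Starts ComfyUI from the embedded Python build. The --listen flag makes the server accept connections from other devices instead of only the local machine, and --port sets the port to %COMFYUI_PORT%.

Pause:

Keeps the command window open after ComfyUI exits so you can read any error output.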
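Before moving on, it can help to check that ComfyUI is actually accepting connections on the port you picked. The snippet below is a small sketch of that check using only Python's standard library. `port_is_open` is a hypothetical helper name, and 8188 is simply the port used in the bat file above.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False
```

Running `port_is_open("127.0.0.1", 8188)` on the PC while the bat file is running should return `True`. Running it from another device against the PC's Tailscale IP exercises the firewall rule as well.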

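Now that the updated bat file is set up, we just need to port forward 8188 (or whatever port you're using) on your router. This is where you'd tunnel through Cloudflare and do some deeper security-related work. Devices signed in to your tailnet reach the PC over Tailscale's encrypted connection, so the forward only matters for access from outside Tailscale.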

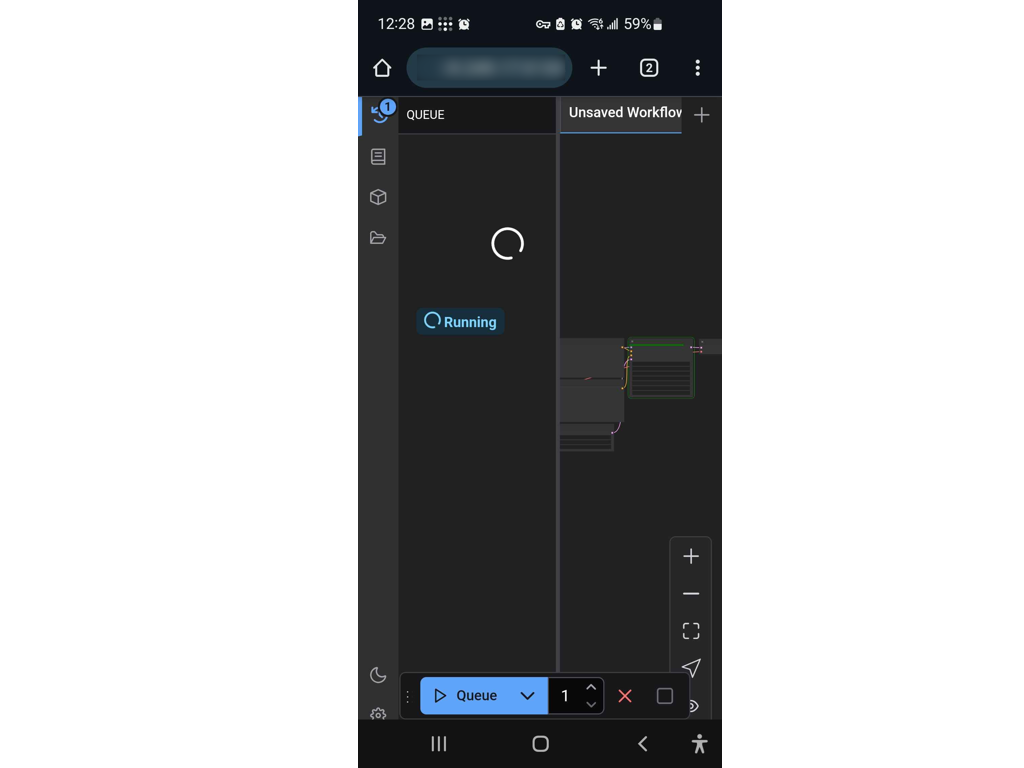

Once you've got the port open, check the IP of the device by right-clicking Tailscale in the tray. That IP is what your phone will connect to. Make sure your phone (or other device) is logged into the Tailscale app and is an enabled device, then jump over to your browser, type in the IP and port as ip:port, and you should load into ComfyUI without a problem!
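The same check can be done from a laptop or any remote device with Python installed. The sketch below builds the address you would type into the browser and asks ComfyUI's `/system_stats` endpoint for a response. The helper names and the example IP in the usage note are placeholders, not values from my setup.

```python
import json
import urllib.request
from typing import Optional


def comfyui_url(host: str, port: int = 8188, path: str = "") -> str:
    """Build the HTTP URL for a ComfyUI instance, e.g. the one typed into the browser."""
    return f"http://{host}:{port}/{path.lstrip('/')}"


def fetch_system_stats(host: str, port: int = 8188, timeout: float = 5.0) -> Optional[dict]:
    """Return the JSON from ComfyUI's /system_stats endpoint, or None on any failure."""
    url = comfyui_url(host, port, "system_stats")
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return json.load(response)
    except (OSError, ValueError):
        # OSError covers connection and HTTP errors; ValueError covers non-JSON replies.
        return None
```

For example, `fetch_system_stats("100.101.102.103")` (substitute the Tailscale IP of your own PC) returns a dictionary when the connection works and `None` when the device can't reach ComfyUI.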

What I Learned

1. Hardware Democratization: A single GPU can become a scalable AI workstation. By decoupling compute from interface devices, I broke free from vendor lock-in and mobile hardware limitations.

2. Security Synergy: Combining enterprise-grade tools like Google OAuth and Cloudflare with Tailscale’s encrypted mesh networks proved more robust than API keys or basic port forwarding, all while remaining frictionless for the end user.

3. Cost Calculus: Cloud AI services often charge 10-100x the actual electricity cost of local inference. For experimental workflows (like generating 500 variations of a node graph), this math becomes indefensible.

4. Workflow Fluidity: Editing ComfyUI graphs on a phone while queuing renders on a home GPU redefined "mobile development." I plan to prioritize interface/backend decoupling in all tools I build.

5. Open-Source Leverage: By building atop ComfyUI and Tailscale, I achieved in 40 hours what would’ve taken 400+ to develop from scratch—a lesson in standing on the shoulders of giants.
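To put rough numbers on the cost calculus in point 3, here's a back-of-the-envelope sketch. Every figure in it is an assumption for illustration rather than a measurement: a 350 W draw (the RTX 3090's rated board power), four hours of rendering a day, and an electricity price of $0.15 per kWh. Swap in your own values.

```python
def electricity_cost(watts: float, hours: float, price_per_kwh: float) -> float:
    """Cost of running a device drawing `watts` for `hours` at `price_per_kwh`."""
    kilowatt_hours = watts / 1000 * hours
    return kilowatt_hours * price_per_kwh


def cost_ratio(cloud_monthly_fee: float, local_monthly_cost: float) -> float:
    """How many times more the cloud fee is than the local electricity cost."""
    return cloud_monthly_fee / local_monthly_cost


# Illustrative assumptions only (not measured values):
GPU_WATTS = 350          # rated board power of an RTX 3090
HOURS_PER_MONTH = 4 * 30 # four hours of rendering a day
PRICE_PER_KWH = 0.15     # hypothetical electricity price in USD
CLOUD_FEE = 100.0        # the $100/month figure mentioned earlier

local = electricity_cost(GPU_WATTS, HOURS_PER_MONTH, PRICE_PER_KWH)
print(f"Local electricity: ${local:.2f}/month")
print(f"Cloud fee is about {cost_ratio(CLOUD_FEE, local):.0f}x that")
```

Under these assumptions the electricity comes to roughly $6.30 a month, which puts a $100 subscription at around 16x the running cost. Hardware cost is left out of this sketch.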

Most importantly, this project revealed that constraints breed creativity. When cloud costs felt inevitable, I found myself limiting experiments to stay within budget. Owning the stack unleashed iterative madness: failed renders became free lessons rather than financial liabilities. While I’ll still likely use cloud services for some things, my core philosophy has shifted. Anything critical to my workflow should run on hardware I control whenever that makes sense.

As a technical artist, I believe there's a lot I can bring into the games industry with this newfound knowledge. One case that comes to mind instantly is hosting perf graphs, other useful information, and kickoff functions for automation pipelines, using Tailscale to give other members of my team encrypted access to data that needs to remain confidential and secure. This project has been extremely beneficial to learn from, and I hope the knowledge shared helps you on your journey with AI!